A spiking neural network model of memory-based reinforcement learning

Filed under:

Computational neuroscience

Takashi Nakano (Okinawa Institute of Science and Technology), Makoto Otsuka (Okinawa Institute of Science and Technology), Junichiro Yoshimoto (Okinawa Institute of Science and Technology), Kenji Doya (Okinawa Institute of Science and Technology)

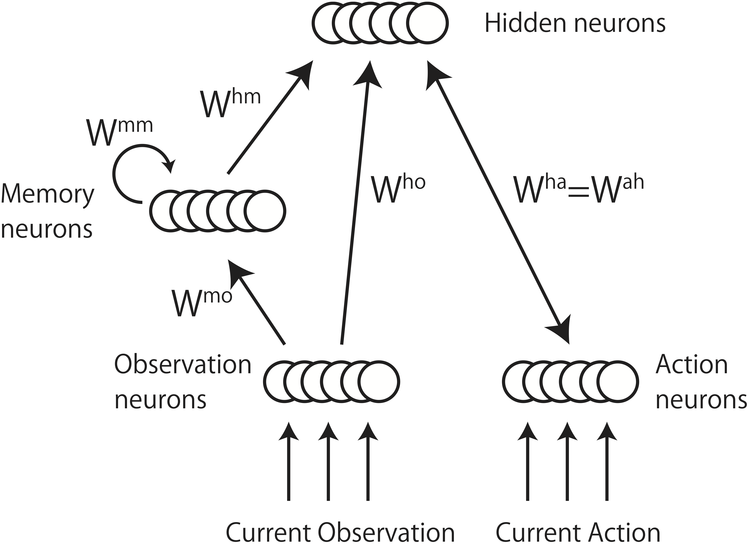

A reinforcement learning framework has been widely used to model animals' decision making in computational neuroscience. To elucidate how reinforcement learning algorithms could be implemented in a biologically plausible way, several spiking neural network models have been proposed. However, most of these models cannot handle high-dimensional observations or make use of past observations, even though both are unavoidable constraints on learning in real environments. In this work, we propose a spiking neural network model of memory-based reinforcement learning that can solve partially observable Markov decision processes (POMDPs) with high-dimensional observations (see Figure).

The proposed model was inspired by the reinforcement learning framework of Sallans and Hinton [1], referred to here as free-energy-based reinforcement learning (FERL). FERL has several desirable characteristics: the ability to handle high-dimensional observations and to form goal-directed internal representations, population coding of action-values, and a Hebbian learning rule modulated by reward prediction errors [2]. Whereas the original FERL was implemented with a restricted Boltzmann machine (RBM), we introduce two extensions: replacing the binary stochastic nodes of the RBM with leaky integrate-and-fire neurons, and incorporating a working memory architecture that implicitly retains temporal information about observations.
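
The core FERL computation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a small RBM whose visible layer concatenates a state vector with a one-hot action, approximates Q(s, a) by the negative free energy -F(s, a), and uses a SARSA-style target (an assumed choice). The class name `FreeEnergyQ`, the layer sizes, and the learning parameters are hypothetical. Because d(-F)/dW_ij = v_i * sigmoid(x_j), the weight change is the product of visible and expected hidden activity scaled by the TD error, which is the reward-modulated Hebbian form. Population coding of actions and the spiking and working-memory extensions are omitted.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


class FreeEnergyQ:
    """Hypothetical minimal FERL agent: an RBM over [state, one-hot action].

    Q(s, a) is approximated by the negative free energy -F(s, a).
    """

    def __init__(self, n_state, n_action, n_hidden, seed=0):
        rng = random.Random(seed)
        self.n_action = n_action
        n_visible = n_state + n_action
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def visible(self, state, action):
        # Clamp the state units plus a one-hot code for the chosen action.
        one_hot = [0.0] * self.n_action
        one_hot[action] = 1.0
        return list(state) + one_hot

    def hidden_input(self, v):
        # x_j = c_j + sum_i v_i * W_ij
        return [cj + sum(vi * row[j] for vi, row in zip(v, self.W))
                for j, cj in enumerate(self.c)]

    def free_energy(self, state, action):
        # F(v) = -b.v - sum_j log(1 + exp(x_j)), with hidden units summed out.
        v = self.visible(state, action)
        x = self.hidden_input(v)
        return (-sum(bi * vi for bi, vi in zip(self.b, v))
                - sum(softplus(xj) for xj in x))

    def q(self, state, action):
        return -self.free_energy(state, action)

    def sarsa_update(self, s, a, r, s_next, a_next,
                     alpha=0.05, gamma=0.9, terminal=False):
        target = r if terminal else r + gamma * self.q(s_next, a_next)
        delta = target - self.q(s, a)  # reward prediction (TD) error
        v = self.visible(s, a)
        h = [sigmoid(xj) for xj in self.hidden_input(v)]  # expected hidden activity
        # d(-F)/dW_ij = v_i * h_j: a pre-times-post (Hebbian) term scaled by delta.
        for i, vi in enumerate(v):
            self.b[i] += alpha * delta * vi
            for j, hj in enumerate(h):
                self.W[i][j] += alpha * delta * vi * hj
        for j, hj in enumerate(h):
            self.c[j] += alpha * delta * hj
        return delta


if __name__ == "__main__":
    agent = FreeEnergyQ(n_state=4, n_action=2, n_hidden=8)
    s = [1.0, 0.0, 1.0, 0.0]
    for _ in range(300):
        # Terminal transition with reward 1, so Q(s, 0) should approach 1.
        agent.sarsa_update(s, 0, 1.0, s, 0, terminal=True)
    print(agent.q(s, 0))
```

All gradients are computed from the parameters before the update, so each call is one plain gradient step on the squared TD error.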

Our model solved reinforcement learning tasks with high-dimensional and uncertain observations without prior knowledge of the environment, and all the desirable characteristics of the FERL framework were preserved in this extension. The negative free energy properly encoded the action-values, and the free energy estimated by the spiking neural network was highly correlated with that computed by the original RBM. Finally, after reward-based learning, the activation patterns of hidden neurons reflected the latent categories behind the high-dimensional observations in a goal-oriented and action-dependent manner.
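
The abstract does not state how the spiking network's free-energy estimate is formed. One hypothetical route, sketched below, is to treat normalized firing rates of leaky integrate-and-fire (LIF) neurons as estimates p_j of the hidden activation probabilities and evaluate the variational free energy F(q) = <E>_q - H(q). For the RBM energy E(v, h) = -b.v - sum_j h_j x_j, with x_j = c_j + sum_i v_i W_ij, this equals the exact free energy when p_j = sigmoid(x_j) and otherwise exceeds it by sum_j KL(p_j || sigmoid(x_j)). The LIF parameters, the linear input-to-current mapping (`gain`, `offset`), and normalization by the maximum rate 1/t_ref are illustrative assumptions, not the authors' model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def exact_free_energy(v, b, x):
    # RBM free energy with hidden units summed out: F(v) = -b.v - sum_j softplus(x_j).
    return -sum(bi * vi for bi, vi in zip(b, v)) - sum(softplus(xj) for xj in x)


def variational_free_energy(v, b, x, p, eps=1e-9):
    # F(q) = <E>_q - H(q) for a factorized Bernoulli q with q(h_j = 1) = p_j.
    # Probabilities are clipped away from 0 and 1 so the entropy stays finite.
    p = [min(max(pj, eps), 1.0 - eps) for pj in p]
    expected_energy = (-sum(bi * vi for bi, vi in zip(b, v))
                       - sum(pj * xj for pj, xj in zip(p, x)))
    entropy = -sum(pj * math.log(pj) + (1.0 - pj) * math.log(1.0 - pj) for pj in p)
    return expected_energy - entropy


def lif_rate(current, tau_m=0.02, v_th=1.0, v_reset=0.0, t_ref=0.002,
             dt=1e-4, duration=0.5):
    # Euler integration of tau_m * dV/dt = -V + current (resistance folded into
    # `current`); spike and reset when V >= v_th, then stay silent for t_ref seconds.
    v, refractory_left, spikes = v_reset, 0.0, 0
    for _ in range(round(duration / dt)):
        if refractory_left > 0.0:
            refractory_left -= dt
            continue
        v += dt / tau_m * (-v + current)
        if v >= v_th:
            spikes += 1
            v, refractory_left = v_reset, t_ref
    return spikes / duration


def spiking_free_energy(v, b, x, gain=2.0, offset=1.0, t_ref=0.002):
    # Hypothetical read-out: drive one LIF neuron per hidden unit with a current
    # linear in x_j and use rate / max_rate as the estimate of p(h_j = 1 | v).
    max_rate = 1.0 / t_ref
    p_hat = [lif_rate(offset + gain * xj, t_ref=t_ref) / max_rate for xj in x]
    return variational_free_energy(v, b, x, p_hat)


if __name__ == "__main__":
    v = [1.0, 0.0, 1.0, 0.0, 0.0, 1.0]  # e.g. state bits followed by a one-hot action
    b = [0.1, -0.2, 0.05, 0.0, 0.3, -0.1]
    x = [0.8, -0.4, 1.5]                # hidden inputs x_j = c_j + sum_i v_i W_ij
    print("exact F:  ", exact_free_energy(v, b, x))
    print("spiking F:", spiking_free_energy(v, b, x))
```

Because the current mapping here is uncalibrated, the gap between the two printed values mainly reflects how far the rate code is from sigmoid(x_j); a rate code that tracks sigmoid(x_j) more closely shrinks that gap.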

1. Sallans, B. and Hinton, G. E., Using Free Energies to Represent Q-values in a Multiagent Reinforcement Learning Task. Advances in Neural Information Processing Systems 13, 2001.

2. Otsuka, M., Yoshimoto, J., and Doya, K., Robust Population Coding in Free-Energy-Based Reinforcement Learning. International Conference on Artificial Neural Networks (ICANN) 2008, Part I: 377–386.

Part of this study is the result of "Bioinformatics for brain sciences," carried out under the Strategic Research Program for Brain Sciences by the Ministry of Education, Culture, Sports, Science and Technology (MEXT) of Japan.

This work is also supported by the Strategic Programs for Innovative Research (SPIRE), MEXT, Japan.

Preferred presentation format:

Poster

Topic:

Computational neuroscience

Latest news for Neuroinformatics 2011
