Workshop 3

Chair: Tim Clark

Speakers: Cameron Neylon, Amarnath Gupta, Mercè Crosas

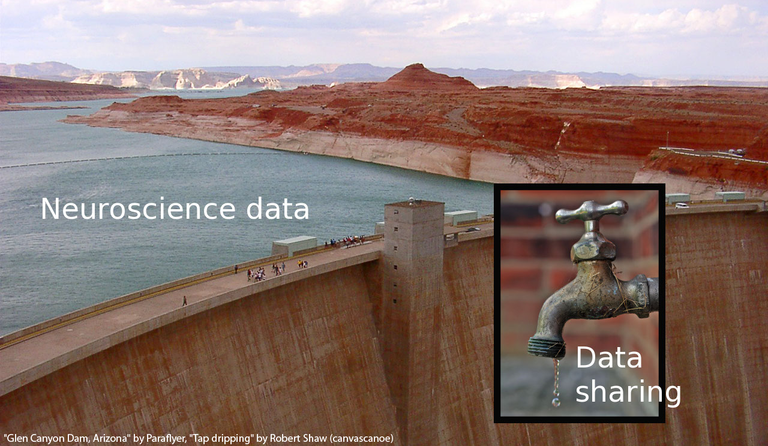

Much attention has been focused on the so-called "data deluge" (see, for example, McFedries 2011). In fact, however, the rapid growth in the size of experimental datasets processed on a routine basis now presents itself to the average scientist as a publications deluge with inadequately referenced experimental results.

While neuroscientists in earlier generations could frequently publish their results in graphical tables contained in their publications, the sheer size of today's datasets means that, typically, only summaries and figures representing the final results of data analysis can be included in scientific articles. Where "supplemental data" is appended, it too often consists of figures summarizing an analysis of the original observations. And the computational processing steps (workflows) by which the data were reduced to the figures and charts seen in primary publications are not typically stored in any robust, persistent way either.

This situation does not inspire great confidence in the reproducibility of some experiments reported in the literature, and it certainly does not support the reuse of expensively produced data and computations. As a result, several groups have now begun to take up the problem of persistently storing, citing, retrieving, and reusing both datasets and the computational workflows used to process them.

This workshop will spotlight presentations and a panel discussion on how to take these initial steps forward to produce a consistently verifiable and reproducible scientific literature derived from "big data".